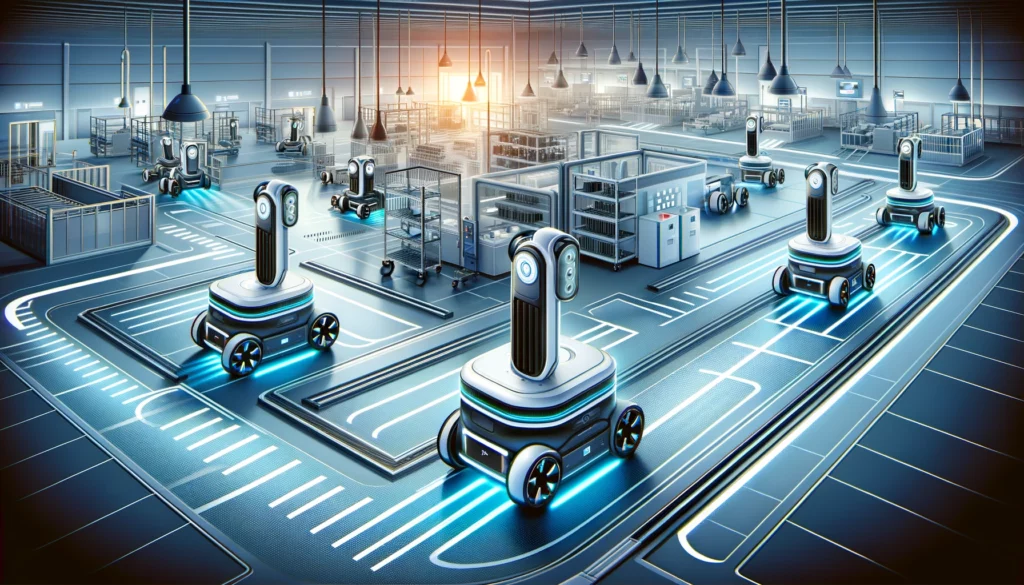

Ever since the Industrial Revolution, humanity has pushed ever harder to get machines to do its work. First it was steam, then electricity. Now we have reached a stage where we can build autonomous robots that complete a job from start to finish. These autonomous mobile robots (AMRs) mark an important leap forward, not just in robotics but in our collective approach to work, life, and technological innovation.

However, these robots still have limitations. One significant bottleneck in industrial robotics is the time and task-specific programming needed to teach robots new skills such as grasping objects and assembling products. Using deep reinforcement learning, a team of researchers has now introduced algorithms that substantially reduce the time robots need to acquire such skills.

Why AMRs are arriving now

Mobile robots have pioneered automation in environments ranging from manufacturing floors to warehouse aisles. Unlike stationary robots, they can move between locations and perform tasks wherever they are needed.

These intelligent machines navigate dynamic environments without human intervention, leveraging sophisticated sensors, artificial intelligence (AI), and machine learning algorithms.

Combined with computer vision and other machine learning tools, such algorithms can also help robots adapt quickly to grasping and assembling different objects. Autonomous robots are arriving now mainly because of major advances in AI, sensor technology, and computing power, together with the growing availability and affordability of these technologies.

Better senses

Sensor technology is crucial to the development and operation of autonomous robots, acting as their eyes and ears. Sensors let robots perceive their environment, navigate spaces, identify objects, avoid obstacles, and interact safely with people. This sensory input is the basis for autonomous decision-making, allowing robots to adapt to changing conditions, avoid hazards, and carry out tasks with precision.
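
As a rough illustration of how sensor input can feed a simple decision, the sketch below slows a robot as the nearest obstacle ahead gets closer. It is a minimal reactive rule, not how any particular AMR works: it assumes a planar range scan supplied as hypothetical `(angle, range)` pairs with 0 rad pointing forward, and the function names `nearest_in_front` and `safe_speed` and the `stop_dist`/`slow_dist` thresholds are illustrative choices. Production robots layer path planners and certified safety controllers on top of logic like this.

```python
import math


def nearest_in_front(scan, half_fov_rad=math.radians(30)):
    """Smallest range among beams within +/- half_fov_rad of straight ahead.

    scan: iterable of (angle_rad, range_m) pairs, where 0 rad points forward.
    Returns math.inf when no valid return falls inside the forward sector.
    """
    ranges = [r for angle, r in scan if abs(angle) <= half_fov_rad and r > 0.0]
    return min(ranges, default=math.inf)


def safe_speed(scan, max_speed=1.0, stop_dist=0.5, slow_dist=2.0):
    """Hypothetical reactive rule: full speed when clear, linear slowdown, then stop."""
    d = nearest_in_front(scan)
    if d <= stop_dist:
        return 0.0  # obstacle too close: stop
    if d >= slow_dist:
        return max_speed  # path clear: full speed
    # Scale speed linearly between stop_dist (0) and slow_dist (max_speed).
    return max_speed * (d - stop_dist) / (slow_dist - stop_dist)


scan = [(math.radians(-45), 0.3), (0.0, 1.25), (math.radians(10), 3.0)]
print(safe_speed(scan))  # 0.5
```

Here the 0.3 m return at -45° lies outside the assumed ±30° forward sector and is ignored, so the nearest relevant obstacle is 1.25 m ahead and the robot drops to half speed.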

Without advanced sensors, robots could not understand or react to their surroundings on their own, which would severely limit their functionality and reliability. Combining diverse sensors not only improves a robot’s ability to operate in complex environments but also makes its actions safer and more efficient.

Among the many sensors fitted to autonomous robots, some of the most important are lidar (light detection and ranging) sensors, cameras, ultrasonic sensors, and inertial measurement units (IMUs). Lidar sensors use laser light to measure distances and build detailed 3D maps of the robot’s surroundings, enabling precise navigation and obstacle detection. Cameras, especially when paired with computer vision algorithms, let robots identify objects, read signs, and understand context. Ultrasonic sensors offer another way to detect obstacles: they emit sound waves and measure the returning echoes, which is useful in tight spaces where other sensors may be less effective. Finally, IMUs track the robot’s movement and orientation by measuring acceleration and rotation rate, which is crucial for maintaining stability and accurate positioning.
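
Pulsed lidar and ultrasonic sensors share the same time-of-flight principle: the pulse travels to the target and back, so distance = wave speed × round-trip time / 2. The sketch below applies that formula to both sound and light, and also shows the simplest way an IMU’s gyroscope output becomes a heading estimate, by summing rate × time step. The speed-of-sound value assumes dry air at about 20 °C, and the helper names are hypothetical.

```python
import math

# Illustrative helpers; names are hypothetical.
SPEED_OF_SOUND_M_S = 343.0         # dry air at about 20 °C; changes with temperature
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance(round_trip_s, wave_speed_m_s):
    """Distance to a target from a round-trip echo time (the pulse goes out and back)."""
    return wave_speed_m_s * round_trip_s / 2.0


def integrate_yaw(yaw_rates_rad_s, dt_s, yaw0_rad=0.0):
    """Dead-reckon heading by summing gyro yaw-rate samples, wrapped to [-pi, pi]."""
    yaw = yaw0_rad
    for rate in yaw_rates_rad_s:
        yaw += rate * dt_s  # rectangle-rule integration of angular rate
    return math.atan2(math.sin(yaw), math.cos(yaw))


print(tof_distance(0.010, SPEED_OF_SOUND_M_S))    # ultrasonic: about 1.715 m
print(tof_distance(11.44e-9, SPEED_OF_LIGHT_M_S))  # lidar: about 1.715 m
print(integrate_yaw([0.5] * 100, 0.01))            # about 0.5 rad
```

The two ranging calls show why lidar electronics must be so fast: a distance that takes an ultrasonic echo about 10 ms corresponds to a light round trip of roughly 11 ns. Integrating gyro rates also accumulates every small measurement error, so heading estimated this way drifts over time, which is one reason IMU data is usually combined with other sensors.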

Together, these sensors empower autonomous robots to perform a wide range of tasks, from navigating busy warehouse floors to safely driving on public roads, marking a new era in robotic autonomy and capability.

All this requires a lot of processing power and adaptability

Simply navigating an environment is tough enough. Doing a designated robot job is even tougher.

AI is pivotal for autonomous robots because it gives them the ability to learn from and adapt to their environments, making decisions with minimal human intervention. With AI, robots can process large volumes of sensor data in real time, recognizing patterns, obstacles, and opportunities for interaction within their operating areas. AI also enables continuous improvement through machine learning, allowing robots to become more efficient and autonomous over time.

Using machine learning so that robots can acquire new skills on their own is exactly what the authors of a new study propose.

“This paper proposes a deep reinforcement learning-based framework for robot autonomous grasping and assembly skill learning,” Chengjun Chen, Hao Zhang and their colleagues wrote in their paper.
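
The paper’s framework uses deep reinforcement learning, in which a neural network learns a behavior from trial and reward. As a much-simplified illustration of the underlying reinforcement learning loop (not the authors’ algorithm), the sketch below uses tabular Q-learning, with a lookup table standing in for the deep network, on a hypothetical one-dimensional task: the gripper must move to the cell holding an object and then close. The workspace size, target cell, rewards, and hyperparameters are all assumptions chosen for the example.

```python
import random

# Toy, hypothetical setup for illustration only; not the authors' environment or algorithm.
N_CELLS = 7          # 1-D workspace discretized into cells
TARGET = 3           # cell where the object sits
ACTIONS = (0, 1, 2)  # 0 = move left, 1 = move right, 2 = close gripper
MAX_STEPS = 20       # cap on episode length


def step(pos, action):
    """Return (next_pos, reward, done) for the toy grasping task."""
    if action == 2:  # closing the gripper ends the episode
        return pos, (1.0 if pos == TARGET else -1.0), True
    nxt = max(0, min(N_CELLS - 1, pos + (-1 if action == 0 else 1)))
    return nxt, -0.01, False  # small cost per move


def train(episodes=3000, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_CELLS)]
    for _ in range(episodes):
        pos = rng.randrange(N_CELLS)  # random start position
        for _ in range(MAX_STEPS):
            row = q[pos]
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                a = row.index(max(row))  # exploit: best known action
            nxt, r, done = step(pos, a)
            # Q-learning target: reward plus discounted best value of the next state.
            target = r if done else r + gamma * max(q[nxt])
            row[a] += alpha * (target - row[a])
            pos = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action in each cell."""
    return [row.index(max(row)) for row in q]


print(greedy_policy(train()))
```

With these settings the learned policy moves right from cells left of the target, closes the gripper at the target, and moves left from cells to its right. The per-move penalty and the discount factor both push the agent toward the shortest route to the object; a deep RL method replaces the table with a network so the same idea can scale to camera images and continuous arm motions.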

The researchers validated the approach in both simulation and real-world experiments with robotic arms, achieving high success rates in grasping and assembly tasks. The work could streamline the programming of industrial robots, making them more flexible and efficient at supporting human workers in manufacturing, and the team plans further research to refine the technology and test it on common industrial tasks.

“The effectiveness of the proposed framework and algorithms was verified in both simulated and real environments, and the average success rate of grasping in both environments was up to 90%. Under a peg-in-hole assembly tolerance of 3 mm, the assembly success rate was 86.7% and 73.3% in the simulated environment and the physical environment, respectively,” the researchers wrote in their paper.

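These results are encouraging, but there is still plenty of room for improvement, especially in the physical environment, where the assembly success rate was lower than in simulation.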

“In future work, we will improve the hole detection accuracy and domain randomization of the shape and image of the holes in the virtual environment, optimize the strategy from the simulation environment to the physical environment, and reduce errors in both stages to improve the assembly success rate in the physical environment,” the researchers concluded.
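
Domain randomization, which the researchers name as a target for future work, means varying a simulator’s visual and physical parameters from episode to episode so that a policy trained in simulation is less sensitive to the differences it meets in the real world. The sketch below samples a randomized hole scene for each training episode. The parameter set (shape, diameter, color, lighting, camera offset), the `HoleScene` and `sample_scene` names, and every range are hypothetical, since the paper’s actual randomization scheme is not described here.

```python
import random
from dataclasses import dataclass

SHAPES = ("circle", "square", "hexagon")  # hypothetical set of hole shapes


@dataclass
class HoleScene:
    """One randomized hole appearance for a simulated training episode (illustrative)."""
    shape: str
    diameter_mm: float
    rgb: tuple                 # surface color around the hole, 0-255 per channel
    light_intensity: float     # relative to a nominal 1.0
    camera_offset_mm: tuple    # small random shift of the camera position (x, y, z)


def sample_scene(rng, nominal_diameter_mm=20.0):
    """Draw a new scene; every range here is an assumption, not a value from the paper."""
    return HoleScene(
        shape=rng.choice(SHAPES),
        diameter_mm=nominal_diameter_mm * rng.uniform(0.9, 1.1),  # +/-10 % size
        rgb=tuple(rng.randint(0, 255) for _ in range(3)),
        light_intensity=rng.uniform(0.5, 1.5),
        camera_offset_mm=tuple(rng.gauss(0.0, 2.0) for _ in range(3)),
    )


rng = random.Random(0)
for episode in range(3):
    scene = sample_scene(rng)
    # A real pipeline would reset the simulator with `scene` here before each rollout.
    print(episode, scene.shape, round(scene.diameter_mm, 1))
```

Passing an explicitly seeded `random.Random` makes a training run reproducible while still giving each episode a different scene.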